Is Your Identity Security AI-Proof?

- November 4, 2024

- Posted by: claudia

The article examines the growing threat that artificial intelligence (AI) poses to identity security, focusing on AI impersonation fraud. It describes how AI has moved from a theoretical threat to a practical tool that cybercriminals use across multiple industries. The rise of AI-driven attacks creates an urgent need for effective defenses, and the article suggests that traditional methods may no longer suffice against such advanced threats.

Recent incidents of AI impersonation fraud illustrate how serious the problem has become. Attackers readily exploit widely available technologies, which makes their operations both simpler and cheaper. The implication is that organizations must adapt quickly to mitigate these risks, particularly as AI continues to enhance the capabilities of fraudsters.

Current solutions, such as deepfake detection tools and user training programs, are presented as insufficient for three reasons. First, deepfake generation and detection are locked in what has been termed an “AI arms race,” in which neither side achieves lasting dominance. Second, detection methods are probabilistic, which leaves significant room for error. Third, training programs expect end users to rely on their own judgment even as fraudulent tactics grow increasingly sophisticated.
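To make the point about probabilistic detection concrete, here is a small worked example. Every figure in it (call volume, share of fraudulent calls, detection rate, false positive rate) is an illustrative assumption, not a number from the article or from any real detector.

```python
def detector_outcomes(total_calls, fraud_rate, true_positive_rate, false_positive_rate):
    """Return (missed_fakes, false_alarms, precision) for a probabilistic detector.

    All inputs are hypothetical; the function only does base-rate arithmetic.
    """
    fraudulent = total_calls * fraud_rate
    legitimate = total_calls - fraudulent

    caught = fraudulent * true_positive_rate       # deepfakes correctly flagged
    missed = fraudulent - caught                   # deepfakes that slip through
    false_alarms = legitimate * false_positive_rate  # real users wrongly flagged

    # Share of all alerts that point at an actual deepfake.
    precision = caught / (caught + false_alarms)
    return missed, false_alarms, precision


# Assumed scenario: 100,000 calls, 0.1% fraudulent, 95% detection, 2% false positives.
missed, false_alarms, precision = detector_outcomes(
    total_calls=100_000,
    fraud_rate=0.001,
    true_positive_rate=0.95,
    false_positive_rate=0.02,
)
print(f"missed fakes: {missed:.0f}")
print(f"false alarms: {false_alarms:.0f}")
print(f"share of alerts that are real fraud: {precision:.1%}")
```

Under these assumed numbers, a handful of deepfakes still get through, roughly two thousand legitimate calls are flagged, and fewer than one alert in twenty points at real fraud. A person still has to make the final call on each alert.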

The article proposes a shift in perspective: AI impersonation attacks stem from vulnerabilities in identity security. To succeed, a fraudster must first compromise a legitimate user’s identity. In contrast to traditional detection methods, a secure-by-design identity platform can provide strong assurance of a user’s identity through cryptographic means and can verify that the user’s device complies with security requirements.

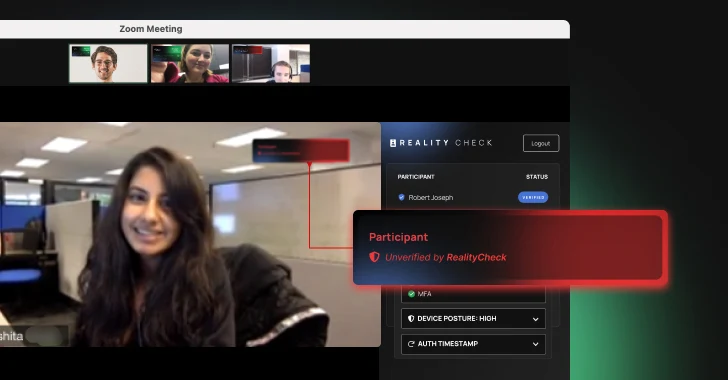

The article introduces “RealityCheck,” a new offering from Beyond Identity that extends its identity security platform. RealityCheck is designed to combat AI deepfake fraud by ensuring strong identity assurance and compliance with device security standards. It enhances security for virtual interactions, such as video conferencing, by providing visual confirmation of user identity, thereby helping to establish trust among participants.

Key advantages of the proposed secure-by-design identity platform include the use of phishing-resistant credentials, device recognition, and the ability to assess risk holistically through real-time data signals concerning users and devices. This multi-faceted approach aims to decisively eliminate identity-based attacks.
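The idea of combining credential, device, and risk signals into one decision can be sketched as a simple policy check. This is a minimal illustration under stated assumptions: the signal names, the `AccessSignals` type, and the `evaluate_access` function are all hypothetical and do not represent Beyond Identity’s API or data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessSignals:
    """Hypothetical per-request signals; field names are illustrative only."""
    credential_is_phishing_resistant: bool  # e.g. a device-bound key, not a password or OTP
    device_is_recognized: bool              # the device was previously enrolled for this user
    disk_encrypted: bool                    # assumed example of a device posture check
    os_patched: bool                        # assumed example of a device posture check


def evaluate_access(signals):
    """Return (allowed, failed_checks).

    Every check must pass. The decision is deterministic rather than a
    probability score, which is the contrast the article draws with detection.
    """
    checks = {
        "credential_is_phishing_resistant": signals.credential_is_phishing_resistant,
        "device_is_recognized": signals.device_is_recognized,
        "disk_encrypted": signals.disk_encrypted,
        "os_patched": signals.os_patched,
    }
    failed = [name for name, passed in checks.items() if not passed]
    return (not failed, failed)


# Example: a valid credential presented from an unrecognized, unpatched device.
allowed, failed = evaluate_access(
    AccessSignals(
        credential_is_phishing_resistant=True,
        device_is_recognized=False,
        disk_encrypted=True,
        os_patched=False,
    )
)
print(allowed, failed)
```

Returning the list of failed checks alongside the decision lets a caller explain a denial or prompt the user to fix the device. A real platform would presumably evaluate such signals continuously rather than once per request.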

Beyond Identity asserts that ensuring both identity and device security is crucial for thwarting AI deepfake fraud. By providing users with a tamper-proof visual badge that communicates these assurances, RealityCheck facilitates safer collaboration and helps mitigate the impact of impersonation threats. RealityCheck integrates with popular communication platforms, including Zoom and Microsoft Teams, and support for Slack and email is planned.

Overall, the article emphasizes the need for organizations to adopt a comprehensive identity security strategy that proactively addresses both existing and emerging threats, such as AI impersonation fraud. By integrating such technologies and practices, businesses can bolster their defenses against identity risks effectively. The call to action suggests exploring personalized demonstrations of Beyond Identity’s offerings to understand their practical implications for securing organizational communications against evolving cyber threats.